Quality Approaches - Architecture Quality

Architecture Quality

Today, I would like to focus on the solution that the team is building and how we could embed quality into that process. In my previous post, I discussed how we could integrate quality into User Stories. What I have advocated for in the past is assigning a Risk Rating to a story. A risk value is a measure of how likely it is that something negative will happen and how severe the consequences would be. One simple way to calculate a risk value is to multiply the probability of the event by its impact. Assigning risk is subjective: an item that one person considers high risk might not be considered high risk by another. Therefore, assigning a Risk Rating while gathering perspectives from different team members helps mitigate bias and leads to a more well-rounded decision.
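As a rough sketch of the probability-times-impact calculation, here is how it could look in Python. The 1-to-5 scale, the function names, and the choice to average the team's individual scores are all assumptions made for illustration; use whatever scale and aggregation your team agrees on.

```python
from statistics import mean


def risk_value(probability: int, impact: int) -> int:
    """Risk value as probability multiplied by impact.

    Both inputs are assumed to be scores on a 1-5 scale (an illustrative choice).
    """
    for name, score in (("probability", probability), ("impact", impact)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {score}")
    return probability * impact


def team_risk_value(ratings: list[tuple[int, int]]) -> float:
    """Average the individual risk values to dampen any single person's bias.

    Each rating is a (probability, impact) pair from one team member.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    return mean(risk_value(p, i) for p, i in ratings)
```

For example, three team members rating a story as `(3, 4)`, `(2, 3)`, and `(4, 3)` would produce individual risk values of 12, 6, and 12. The spread between those numbers is often a more useful conversation starter than the average itself.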

Based on the Risk Rating we give to a story, we could look at how deep we would like to go to apply quality approaches from an architectural lens. If the Risk Rating is low, it sometimes might not make sense to do everything stated below. Many of the approaches I've identified below have resulted from my collaborations with outstanding engineers and architects in the past, shaping my perspective on this subject.

Approaches you can use to embed quality within Architecture

Solution Design is documented and addresses business requirements

While it may seem obvious, there have been a few instances where no solution design documents or diagrams were present. Documenting the solution design ensures that everyone involved in the project, including team members, stakeholders, and decision-makers, has a clear and shared understanding of the intended goals of the solution. This understanding can be conveyed in many ways, such as system documentation, architecture diagrams, sequence diagrams, or dataflow diagrams.

Regarding the second half of the statement, addressing business requirements, there have been occasions when we were enticed by new and shiny technology to accomplish a task. Who doesn't appreciate new tech? It might make sense in some cases, especially if you're experimenting with technology that could potentially be rolled out to other parts of the organization. However, a suitable and tested approach would be to focus on the business requirements and design a solution that meets those requirements with as little complexity as possible. This approach benefits the entire team from a maintenance perspective.

As an example, I was working on a website that essentially served as an online brochure, offering basic information about the business and a few static pages. It didn't have a substantial number of users. The team, though small, decided to implement Kubernetes to manage container orchestration for scalability and uptime management. However, I believed it was overkill for our goals. In my opinion, the operational overhead required to become proficient with Kubernetes, along with the maintenance issues that arose, outweighed the benefits it offered.

From a QA perspective, having documentation or diagrams that detail the components of a system and illustrate data flows is highly advantageous. It helps us understand which subsystems within a system require our attention. When determining what checks to automate, we may choose to prioritize micro-interactions between components rather than exhaustively testing the entire system. Having this information is therefore valuable when shaping what we focus on while testing.

As an example, there was a system I needed to test where the presence of a queuing mechanism to ensure guaranteed message delivery was not immediately apparent, based on what the system was meant to do. With an architecture diagram in place, I was able to discern the existence of the queue and subsequently develop scenarios related to queue failures and retry mechanisms, to ensure that part of the system was tested.
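To give a flavour of the kind of queue failure and retry scenarios I mean, here is a minimal sketch in Python. `FlakyQueue` and `publish_with_retry` are hypothetical stand-ins written for this post, not the actual system or any real queue client; a real test would target whatever queue and retry policy your architecture diagram shows.

```python
class FlakyQueue:
    """Hypothetical in-memory queue that fails a set number of times before working."""

    def __init__(self, failures_before_success: int):
        self.failures_left = failures_before_success
        self.delivered: list[str] = []

    def publish(self, message: str) -> None:
        if self.failures_left > 0:
            self.failures_left -= 1
            raise ConnectionError("queue unavailable")
        self.delivered.append(message)


def publish_with_retry(queue: FlakyQueue, message: str, max_attempts: int = 3) -> int:
    """Publish a message, retrying on connection errors.

    Returns the number of attempts used, and re-raises the error once
    max_attempts have been exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            queue.publish(message)
            return attempt
        except ConnectionError:
            if attempt == max_attempts:
                raise
    raise ValueError("max_attempts must be at least 1")
```

Two scenarios fall out of this straight away: a queue that recovers within the retry budget (the message should be delivered exactly once), and a queue that stays down (the error should surface and nothing should be delivered).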

Tools like Mermaid now enable us to write architecture diagrams as code and store them in a Git repository. I find this highly advantageous because it allows us to review the version history of the diagrams, providing valuable context for the architectural decisions made over time and the reasoning given for each change.
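As a small illustration of what "diagrams as code" can look like, the Python sketch below turns a list of component connections into Mermaid flowchart text that could be committed alongside the code. The helper name and the component names (`web`, `queue`, `worker`) are made up for this example; you can just as easily write the Mermaid text by hand.

```python
def to_mermaid(edges: list[tuple[str, str, str]]) -> str:
    """Render (source, label, target) edges as a left-to-right Mermaid flowchart.

    Node names are used as Mermaid node ids, so they should not contain spaces.
    """
    lines = ["flowchart LR"]
    for source, label, target in edges:
        lines.append(f"    {source} -->|{label}| {target}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical components for illustration only.
    print(to_mermaid([
        ("web", "enqueue order", "queue"),
        ("queue", "deliver", "worker"),
    ]))
```

Because the output is plain text, a change to the architecture shows up as an ordinary diff in a pull request, which is where the reasoning for the change can be discussed and recorded.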

Solution design is compliant with the existing architecture in place

This is a challenge I grappled with in the past. My line of thinking revolved around the idea that if we never embrace new technology or components, how can we ascertain whether they are genuinely superior? I once belonged to a team where the core engine was intended to remain untouched while we built the surrounding systems using, at the time, serverless technology that the entire team was unfamiliar with. The considerable amount of time it took for the team to gain proficiency in utilizing this new technology, along with adapting to new deployment and development processes, ultimately led to a failure in delivering our product on time. Even after the system went live, we encountered numerous issues when it came to system maintenance, which could have been easily avoided had we adhered to technology that the team was comfortable with.

It's important to note that there is no definitive right or wrong answer in this scenario. Had we accounted for the time the team needed to acquire the necessary skills and adapt to the new technology, the project could well have been a success. Likewise, if the advantages of the new architecture significantly outweigh those of the current one in the long run, moving to it is a worthwhile investment. The answer likely hinges on the specific goals we are striving to achieve. Conforming to an existing architecture brings consistency, familiarity, and ease of maintenance. It also mitigates risk, particularly if the existing architecture has already addressed concerns such as compatibility, security, and performance. Therefore, if time is of the essence and we aim to maintain consistency within the team, it is advantageous to design new systems or components in compliance with the existing architecture.

Solution Design is compliant with non-functional requirements

In the realm of testing, non-functional requirements have often been given lower priority (in most teams I’ve been part of). Teams typically only address testing with non-functional requirements in mind if they have the available time. Similarly, there have been instances where adhering to architecture designed to meet non-functional requirements was not the primary focus. For instance, in a project I was involved in, our load profile requirements differed significantly from most systems. We experienced a surge in user activity for brief periods, followed by a return to normal levels of activity. Unfortunately, the architecture was not tailored to accommodate this particular requirement, resulting in recurrent downtimes during these peak load periods.

Threat Modeling is completed

I would like to emphasize that this is not a requirement for every story but rather depends on the Risk Rating assigned to the proposed story or solution. Diagrams such as architecture, sequence, or data flow diagrams are highly beneficial here: they help us understand our entry and exit points and identify threats at the sub-system level. In the past, I have used the Microsoft Threat Modeling Tool: you add the essential components of your architecture to the tool, and it suggests threats that should be taken into consideration. There are also Threat Modeling frameworks such as STRIDE, among others, that help identify potential threats, and scoring systems such as CVSS that help rate the severity of the vulnerabilities found.
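To show how a framework like STRIDE can be applied to the components on a diagram, here is a minimal Python sketch that generates a checklist of questions per component. The wording of the questions and the component names are my own illustrative choices, and walking every component through every category is a simplification; this is not how the Microsoft tool works internally.

```python
# The six STRIDE categories, each paired with an illustrative prompt question.
STRIDE = {
    "Spoofing": "Could someone pretend to be a trusted user or service here?",
    "Tampering": "Could data or code be modified without authorization here?",
    "Repudiation": "Could an action be denied later because it was not logged?",
    "Information disclosure": "Could data be exposed to someone who should not see it?",
    "Denial of service": "Could this be made unavailable to legitimate users?",
    "Elevation of privilege": "Could someone gain more access than they were granted?",
}


def threat_prompts(components: list[str]) -> list[tuple[str, str, str]]:
    """Return (component, category, question) for every component and category."""
    return [
        (component, category, question)
        for component in components
        for category, question in STRIDE.items()
    ]


if __name__ == "__main__":
    # Hypothetical components taken from an architecture diagram.
    for component, category, question in threat_prompts(["API gateway", "Message queue"]):
        print(f"{component} | {category}: {question}")
```

Even a simple list like this gives the team something concrete to walk through in a threat modeling session, and the answers can be recorded next to the story.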

Threat Modeling is an integral part of our transition towards a shift-left security approach, enabling us to detect issues earlier in the Software Development Life Cycle (SDLC). Even if we do encounter security issues after a production release, having done Threat Modeling means we have already thought through the likely threats and can address the situation proactively rather than reacting to it ad hoc.

Failure Mode Analysis is done

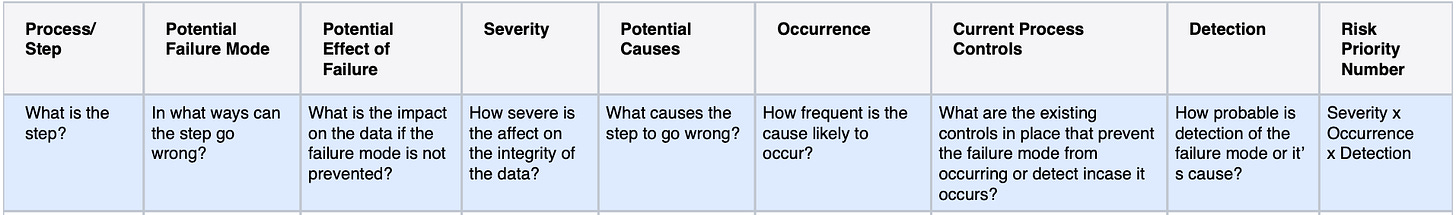

This is another technique that I wouldn't employ every time; its application depends on the Risk Rating assigned to the proposed story. I find that Failure Mode Analysis is similar to identifying scenarios and edge cases, in that it involves testing multiple failure points within the system. I like conducting a Failure Mode Analysis because it compels me to concentrate on potential failures and consider strategies to address them, particularly if they are not preventable. The template shown above outlines the information I try to gather to get a Failure Mode Analysis going.

I am eager to hear everyone's thoughts on how we can integrate quality into the architecture design process, ensuring that our designs are purposefully crafted to enable our customers to use our products seamlessly, without encountering significant issues. It's a realm in which I am not an expert, and I believe that continuous learning is the only way we will all improve in this regard.