Quality Approaches - User Story Quality

Focus on User Stories

In my last post, I talked about quality approaches we could use to build a clear, shared understanding within the team so that we build the right thing for the customer. Today, I will build on that and discuss User Stories and how to bake quality into them so that the final product meets the user’s needs.

What are User Stories

I presume most of us know what User Stories are, but for those who don’t, User Stories are a tool that helps the team building the product collaborate and get on the same page. There has been a lot of discussion about how much information we should put into User Stories, and I would say it depends on the maturity of the product and how much the product is likely to change.

Approaches I use to embed Quality within User Stories

Use a short yet descriptive title to describe the User Story

This would seem like common sense, but in my experience, this takes some skill. When browsing through all the Stories, if I can easily understand the message the story is trying to convey, it saves a lot of time and prevents future misunderstandings.

The story is written from an End User Perspective

This is a pet peeve of mine: many of the past teams I’ve worked with would define technical tasks, crafted by developers, instead of stories. This made it hard to foster a clear understanding between teams. I also saw many testers struggle to work out what they should test for this type of task. I don’t think we should eliminate these tasks, as they provide value in understanding how the problem was solved on a technical level. However, creating them as sub-tasks under a story that provides value to the end user would be much more beneficial. Sometimes, it’s hard to understand what value we are providing to end users (say, we are creating an API to consume). In such cases, our end user becomes the API consumer, and we write the story from that perspective. It’s still much easier to get a shared understanding when the story is written through that lens rather than as a technical story.

The Story is Prioritised and Estimated

Having stories with good titles that are easy to understand makes prioritising them a lot easier. In the past, teams I have been part of picked stories that seemed fun to work on but didn’t provide as much value to the end users. Having these priorities helps focus the team on a shared goal.

Regarding estimation, I could probably write a whole article on it alone, but I believe many teams don’t do this very well. For example, story points have been used as time estimates: 1 point = 1 day’s worth of effort, which defeats the purpose of assigning story points to a story. In this case, setting a time estimate for the story’s completion would be better than using story points. I have seen teams get bogged down in things that are not important, like, “Hey, this does not follow the Fibonacci sequence for estimating” or “This does not match the criteria used by another team in our organisation”, and the estimation process then takes longer than building the software.

What has worked well in my experience is picking an example story that the team has already worked on and assigning it a story point value based on how complex it was to complete. Again, this is subjective, but we have a baseline defined. If the team has been working together for a while, estimating against this baseline becomes easier as time passes. The teams that achieved better results were the ones that did not get hung up on why they missed their story point quota for a sprint, and instead used the points to guide how much work they could complete.

Acceptance Criteria are defined, reviewed and accepted by the team

Acceptance criteria (ACs) are usually conditions or requirements that must be met for a user story to be considered complete and ready for release. Again, having these defined is what develops that shared understanding within the team. Having ACs defined also helps a tester cover at least most of the happy path scenarios. Most teams do this well, so I won’t delve further into this.

Test Scenarios & Edge cases are identified and reviewed

I don’t see a lot of teams doing this, but if we want to shift testing left, identifying the tests, given all the information we have about the story and the system, is an excellent place to start. Testers like to achieve this in different ways, but I have recently used John Ferguson Smart’s Feature Mapping technique to identify what I will test for a given feature. I won’t go into much detail, but essentially, we have a collaborative session (the Three Amigos) where we go through a feature and map out real-world examples of the different pathways a user could take through it. Identifying these examples has prompted the team to ask questions, which in turn has helped us identify the alternative flows a user may take to reach the outcome the feature is meant to achieve. Please take a look at John’s work for more information. As our requirements change, so will our scenarios, but I feel we have a good starting point to work from and plan what we will test.

How do we get started?

Writing good Stories is hard. When I first started in Software Development, I thought this would be easy to do, but it takes quite a bit of time to write them well. Dividing the stories into vertical slices, keeping them small and keeping the end user in mind requires deep thinking. In fast-moving environments where things constantly change, we should ideally add only a little information to stories, because they are likely to change too. Sometimes, just having a clear and concise title is good enough. In other cases, using the INVEST acronym (Independent, Negotiable, Valuable, Estimable, Small, Testable) is a good way to ensure quality is baked into a story.

I needed help remembering the acronym and applying it to a story efficiently. So we came together as a team to answer a few questions that would help build that shared understanding around a story:

What is the purpose of the story?

How will we build it?

How will we test it?

Who can verify we have built the right thing?

How will I know if this change that we pushed was successful?

When we started answering these questions, it helped us bake quality into the story. As it became second nature to us, we gradually weaned ourselves off answering these questions and focussed on how to break stories into smaller pieces while still following our general principles of being testable and providing value.
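For teams that like to keep this visible, the five questions above could be captured as a lightweight checklist. The sketch below is only an illustration: the `Story` class, the short keys in `QUESTIONS` and the `unanswered_questions` method are hypothetical names I have made up for this example, not part of any tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The five questions from this post. The short keys are hypothetical labels.
QUESTIONS: Dict[str, str] = {
    "purpose": "What is the purpose of the story?",
    "build": "How will we build it?",
    "test": "How will we test it?",
    "verifier": "Who can verify we have built the right thing?",
    "success": "How will I know if this change that we pushed was successful?",
}


@dataclass
class Story:
    """A user story plus the team's answers to the five questions."""

    title: str
    answers: Dict[str, str] = field(default_factory=dict)

    def unanswered_questions(self) -> List[str]:
        """Return the questions that have no answer yet (blank counts as none)."""
        return [
            question
            for key, question in QUESTIONS.items()
            if not self.answers.get(key, "").strip()
        ]


# Example story, written from the API consumer's perspective.
story = Story(
    title="API consumer can filter orders by date",
    answers={
        "purpose": "Consumers only want to fetch recent orders.",
        "test": "Examples agreed in the Three Amigos session.",
    },
)

for question in story.unanswered_questions():
    print("Still to discuss:", question)
```

The point is not the code itself but the habit: anything the checklist still prints is a conversation the team has yet to have.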

Why have I given User Stories so much importance?

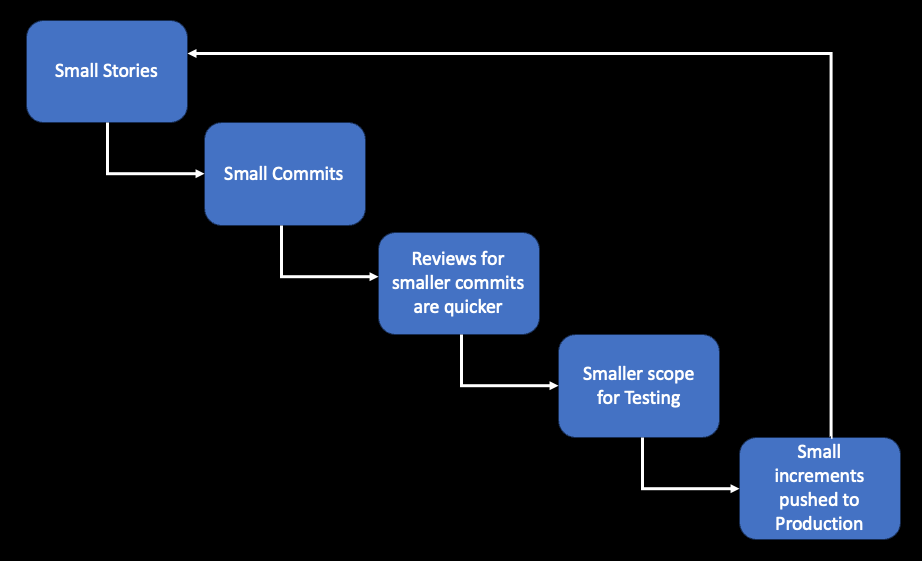

If our end goal is to get small changes into production daily, so that we can monitor them, experiment and quickly make further changes, having user stories built as described above is critical. Good software developers commit small changes into production daily behind feature flags, and when things are ready, they turn on the feature. Still, defining stories that are small, testable and provide value to users is equally important. It gives developers the focus to make these small commits, which helps us push out quality code faster.
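For readers who have not worked with feature flags, here is a minimal sketch of the idea. Everything in it is an assumption made for illustration: the in-memory `FLAGS` dictionary, the flag name `new_order_filter` and the `list_orders` function are hypothetical, and a real team would more likely read flags from configuration or a flag service.

```python
from typing import Dict, List, Optional

# Hypothetical in-memory flag store, used here only to keep the sketch small.
FLAGS: Dict[str, bool] = {"new_order_filter": False}


def is_enabled(flag_name: str) -> bool:
    """Unknown flags default to off, so unfinished work stays hidden."""
    return FLAGS.get(flag_name, False)


def list_orders(orders: List[dict], since: Optional[str] = None) -> List[dict]:
    """Return orders, applying the new date filter only when the flag is on."""
    if since is not None and is_enabled("new_order_filter"):
        # New behaviour, shipped in small commits behind the flag.
        # Dates are ISO strings (YYYY-MM-DD), so string comparison is enough.
        return [order for order in orders if order["date"] >= since]
    # Existing behaviour, untouched while the flag is off.
    return list(orders)


orders = [
    {"id": 1, "date": "2024-01-05"},
    {"id": 2, "date": "2024-03-01"},
]

# Flag off: the filter code is deployed but users see no change.
print(len(list_orders(orders, since="2024-02-01")))

# Flag on: the feature is released without a new deployment.
FLAGS["new_order_filter"] = True
print(len(list_orders(orders, since="2024-02-01")))
```

A small, testable story maps neatly onto one flag like this: the code can reach production in several commits, and the story is released when the flag is switched on.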

I would love to hear everyone’s thoughts on what they have used to ensure that, even before beginning to build the product, the team is aligned on exactly what they are building. If you have any examples or tools you have used to get alignment across teams, please share them in the comments. If there’s interest, I’ll talk next week about how we could use some quality approaches to build quality into the technical solution.

Final Thoughts

Although I have said above that User Stories need to be fairly detailed, that doesn’t need to be the case for all teams. Sometimes a User Story could just be a one-liner to get the conversation started. Small teams that I have worked with, who are great at collaboration, can then work together to build the story and validate it in real time. So it really depends on how teams like to work with each other.